15 Root Finding and Optimization Algorithms

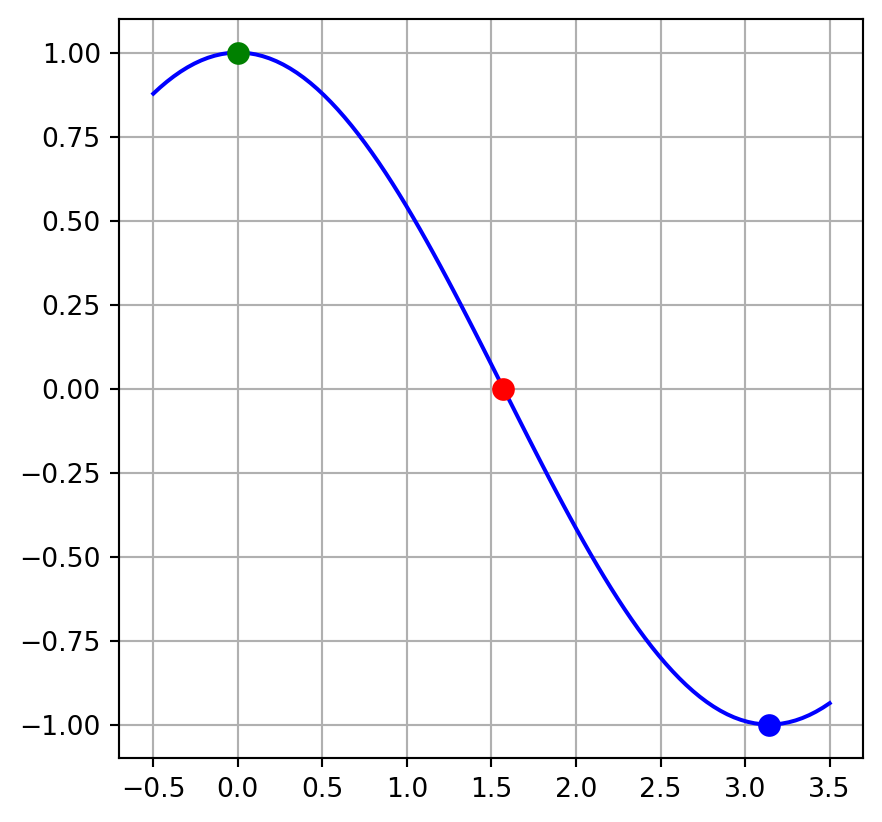

Often in science, we find ourselves wanting to do one of the following: (See figure below)

- Solve an equation for a specified variable.

- Find the zeros of a function (equivalent to solving an equation that is equal to zero).

- Find the extrema (maxima or minima) of a function.

These skills are routinely covered in an algebra class but often the equation we encounter cannot be solved using standard algebraic techniques. Instead we have to resort to a class of numerical algorithms generally referred to as root finding or optimization algorithms. In the remainder of this chapter we will discuss various numerical methods for finding the roots of an equation. The algorithms described below can be used to perform any of the tasks mentioned above.

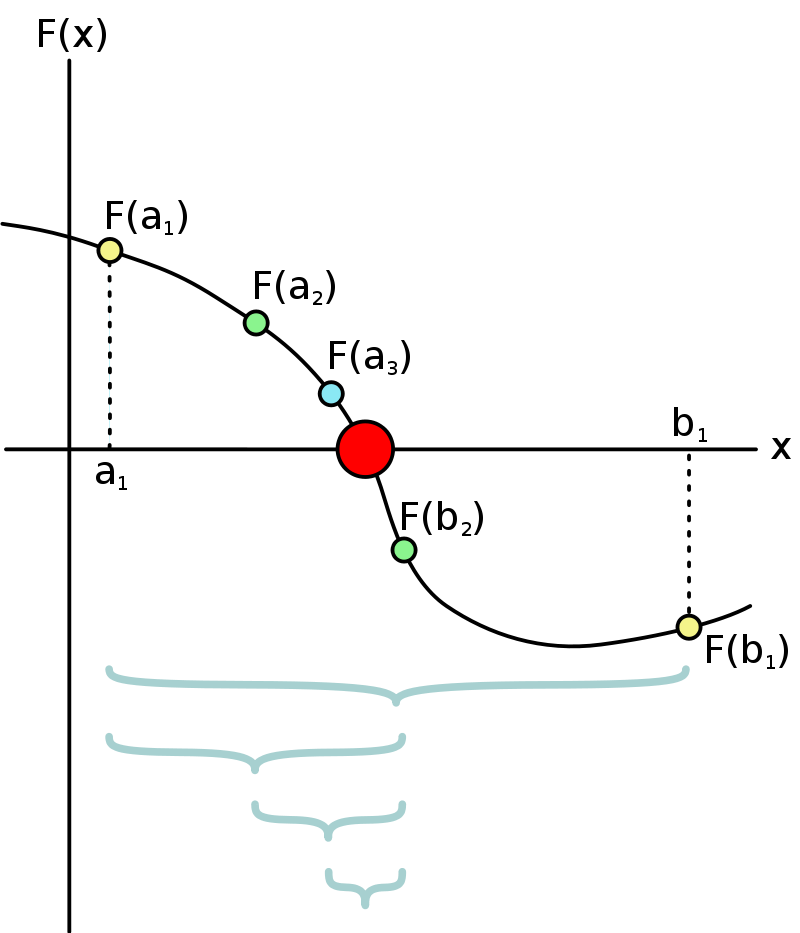

15.1 Bisection

The bisection method finds the roots of a function by iteratively narrowing the interval where the zero is found until the interval becomes sufficiently small. If you want to find the location where the function equals something other than zero, you can simply rearrange the function until a zero appears on the right hand side of the equation and proceed with bisection on the new function. To begin the bisection method, the user must choose an interval wherein the function zero is guaranteed to be found. The midpoint of the interval is then found and a new, narrower interval is chosen. By repeating this process until the interval becomes sufficiently small, the algorithm eventually finds the location where the function is equal to zero. The steps to the algorithm are as follows:

- Choose an interval wherein the function zero is certain to be located. Call the interval \((a,b)\)

- Calculate the midpoint of the interval \(c = {a+ b \over 2}\)

- Calculate the function value at the midpoint (\(f(c)\)).

- By examining the sign of \(f(c)\) determine whether the interval \((a,c)\) or \((c,b)\) contains the zero of the function.

- Repeat steps 2-4 until \(f(c)\) is sufficiently small.

In the code cell below, try to use the bisection method to solve the following equation. \[ y(x) = 5 x^2 - 3x - 2 = 0 \]

# Code here15.2 Newton’s Method

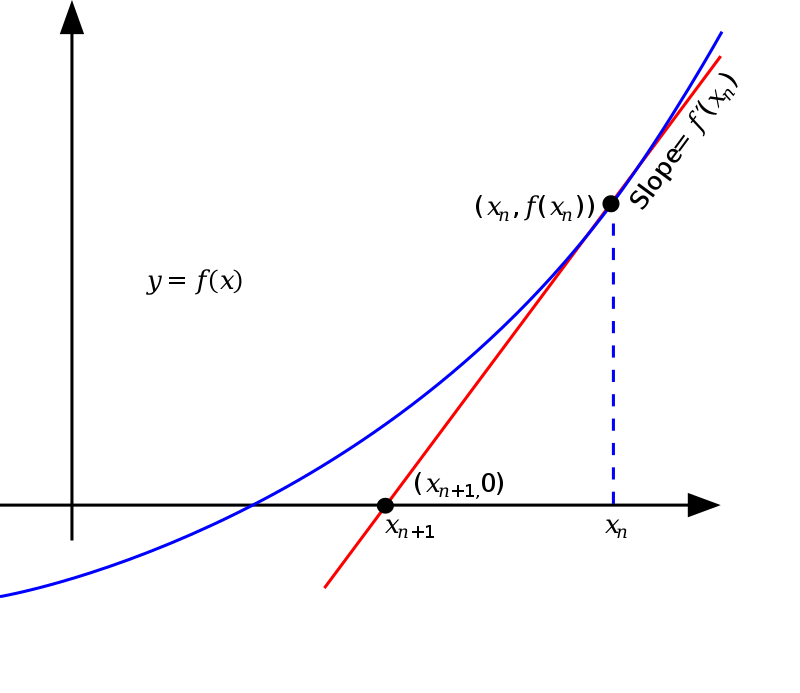

Newton’s method uses the slope of the function to estimate a better approximation to the function’s zero. To understand how we can use the slope to approximate the zero, first let’s write down an approximation to the slope of a function \(f(x)\).

\[ f'(x_0) \approx {f(x_1) - f(x_0) \over x_1 - x_0} \]

If the slope of the function were a constant, we could simply set \(f(x_1) = 0\) and solve for \(x_1\) and we would be done.

\[ x_1 = x_0 - {f(x_0)\over f'(x_0)} \]

In reality, the function will not have a constant slope but this equation will still get us closer to the zero than we were originally. (see figure) By repeatedly evaluating the equation above, always using the most recent estimate as \(x_0\), until \(f(x_0)\) becomes sufficiently small, the location where the function is zero can be found. \[ x_{n+1} = x_n - {f(x_n)\over f'(x_n)} \]

To begin the algorithm, the user must choose an initial guess for the zero that isn’t very far away from the true value. An improvement on this initial guess is calculated using the equation above where \(f'(x_n)\) is the derivative of the function evaluated at the current guess. The algorithm continues with repeated evaluation of the equation above until \(f(x_n)\) evaluates to a sufficiently small number.

Below you will find a code example for solving the following equation:

\[ y(x) = \cos(x) = 0 \]

import numpy as np

x = 2.3

while abs(np.cos(x)) > 1e-5:

x = x + np.cos(x)/np.sin(x)

print(x,np.cos(x))1.5707963256833193 1.1115773359029342e-09If the function of interest has multiple zeros (like the cosine function we did above), one must give careful consideration to the initial guess. Making a poor choice can lead to the algorithm finding a different zero than what was intended.

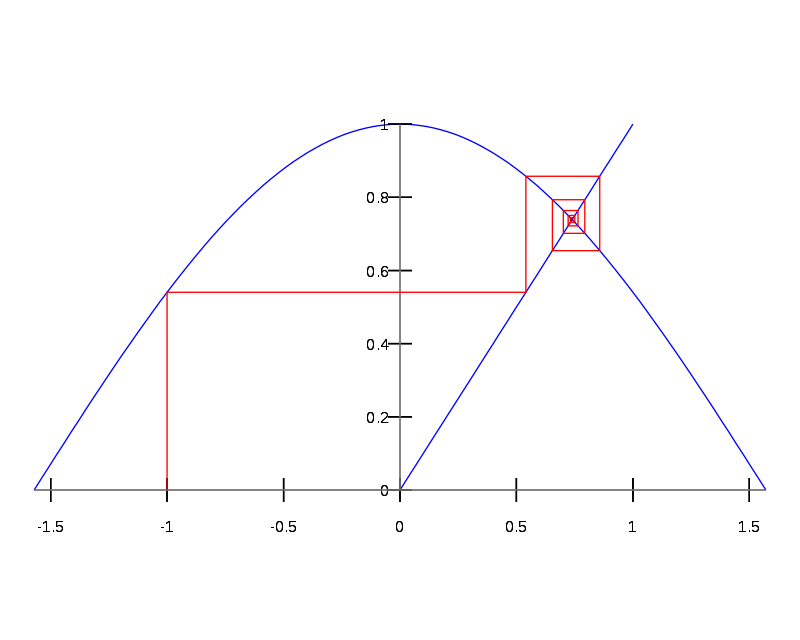

15.3 Fixed-Point Iteration

Fixed-point iteration is another algorithm for finding function zeros. To begin, the function of interest must be rearranged to look similar to the function below. \[ x = \cos(x) \] As opposed to the alternate form:

\[ y(x) = x - \cos(x) = 0 \]

If the function cannot be manipulated to look like the form above, it is not a good candidate for the fixed-point iteration method. Once the equation is in this form, the user must choose an initial guess for the zero just as with the other algorithms. The algorithm then proceeds by using the initial guess to evaluate the right hand side of the equation. This process repeats with the new guess always being used to evaluate the function until \(|x - \cos(x)|\) is sufficiently small. The equation given above is an example of a transcendental equation, or an equation that cannot be solved using algebraic techniques. No matter how hard you try, you’ll never come up with an expression that solves this equation.

The code cell below shows the fixed-point iteration algorithm used to find the zeros of the function mentioned above: \(x = \cos(x)\)

import numpy as np

x = 3

count = 0

while abs(x - np.cos(x)) > 1e-5:

x = np.cos(x)

count += 1To find the zeros of a function that is not in the form: \(x = f(x)\), you must first algebraically manipulate the equation to take this form. For example, the following equation

\[ 2x^3 - 2 x - 5 =0 \]

Is equivalent to: \[ x = (x+{5\over 2})^{1\over 3} \]

And the fixed-point iteration algorithm can now be used to solve for \(x\):

import numpy as np

x = 3

count = 0

while abs(x - (x+5/2)**(1/3)) > 1e-8:

x = (x+5/2)**(1/3)

count += 1

if count > 10000:

break15.4 Finding Extrema

Any of the methods discussed above can be used to find extrema by finding the zeros of the derivative. For example, to find the minimum of this function:

\[ y(x) = 5 x^3 - 2x^2 - 8 x + 2 \]

We can find the zeros of the derivative function:

\[ y'(x) = 15 x^2 - 4 x - 8 \]

And then used fixed-point iteration, or any of the other methods discussed, to solve this equation for \(x\):

import numpy as np

x = 3

count = 0

while abs(x - np.sqrt(4/15 * x + 8/15)) > 1e-8:

x = np.sqrt(4/15 * x + 8/15)

count += 1

if count > 10000:

print("Too many iterations. Results may not be correct!")

break

print(x)0.875701916861266915.5 Exercises

- Use the bisection method to find the zeros of the function \[ y(x) = 8x^3 - 15 x^2 - 4 x + 5\] Hint: This function has three zeros; one in the region [-1,0], one in the region [0,1] and another in the region [1.5,2.5]

# Code Here- Use Newton’s method to find the zero for the following function (slightly different from the one in problem 1) \[y(x) = 8x^3 - 15 x^2 - 4 x - 5\] then use

sympyto solve the equation and verify that the answer are the same.

Answer: You should find that the zero is located at \(\approx 2.2257\)

Hint: The derivative of this function is: \[ y'(x) = 24 x^2 - 30 x - 4 \]

# Code Here- Use fixed-point iteration to solve the following equation\[\left({1\over 2}\right)^x = x^2\]

# Code Here- Use Newton’s method to solve the following equation \[e^x = \cos(x)\] Note: This function has three solutions; one in the region [-5,-4], one in the region [-2,-1] and another in the region [-1,1]. See if you can find all of them by varying your initial guess.

# Code Here- Use a method of your choosing to find the minimum of the function \[y(x) = 10 x^2 \cos(\sqrt{x})\] Hint: If you choose to use the bisection method, you’ll need the first derivative of this function. If you choose to use Newton’s method, you’ll need the first and second derivatives of this function. Both of these functions are given below.

\[ y'(x) = 20 x \cos(\sqrt{x}) - 5 x^{3/2} \sin(\sqrt{x}) \]

\[ y''(x) = 20 \cos(\sqrt{x}) - {5\over 2} x \cos(\sqrt{x}) - {35\over 2} \sqrt{x} \sin(\sqrt{x}) \]

# Code Here